This section describes synchronizing a video recording with screen flashes and EEG data. The demonstration is the same as the screen synchronization demonstration, with a video recording added (using VideoStream.exe from the Mobi Utilities), so that section should be read first.

In the test described here, screen flashes were generated using the Psychophysics Toolbox and this code. A phototransistor (sample circuit here; change the resistor to modify its sensitivity) was taped to the screen to convert the light into a voltage, and was connected to the BioSemi analog input box. In parallel, a conventional video camera was pointed at the screen. Two LSL streams were used. The EngineEvents stream generates a marker after every stimulus frame flip. On flips where the screen changes from black to white (or vice versa), EngineEvents generates 'black' or 'white' instead of the 'flip' marker. This lets us confirm that the stimulus light transitions can be synchronized to the EEG and video data using the LSL markers as the reference. VideoStream.exe generates a marker for every video frame acquired, which we use to synchronize the recorded movie.
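
On the EEG side, locating each light transition amounts to finding where the phototransistor voltage crosses a threshold. The sketch below is not the analysis code used in this test. It is a minimal illustration that assumes the signal is already a numpy array at the EEG sampling rate, that brighter light gives a higher voltage (this depends on the circuit), and that a threshold midway between the darkest and brightest levels is adequate. The function name `find_transitions` is hypothetical.

```python
import numpy as np

def find_transitions(signal, fs, threshold=None):
    """Locate black/white transitions in a sampled phototransistor signal.

    Returns (times_s, directions): crossing times in seconds, interpolated
    between samples, and +1 for rising / -1 for falling crossings.
    Assumes brighter light gives a higher voltage (depends on the circuit).
    """
    signal = np.asarray(signal, dtype=float)
    if threshold is None:
        # Assumption: midway between the darkest and brightest levels.
        threshold = 0.5 * (signal.min() + signal.max())
    above = signal > threshold
    # idx[k] marks a crossing between sample idx[k] and sample idx[k] + 1.
    idx = np.flatnonzero(above[1:] != above[:-1])
    y0, y1 = signal[idx], signal[idx + 1]
    # Linear interpolation for sub-sample timing (y0 != y1 at every crossing).
    frac = (threshold - y0) / (y1 - y0)
    times_s = (idx + frac) / fs
    directions = np.where(above[idx + 1], 1, -1)
    return times_s, directions

# Synthetic example: 2048 Hz sampling, screen toggling every 0.25 s.
fs = 2048
t = np.arange(fs) / fs
sig = np.floor(t * 4) % 2
times_s, directions = find_transitions(sig, fs)
```

Interpolating between samples keeps the estimate finer than one sample period (about 0.49 ms at 2048 Hz). A real recording would likely also need filtering or hysteresis to reject noise near the threshold.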

VideoStream.exe outputs a compressed .asf movie file. This file can be loaded by processVideoFlashes, which asks the user to select the region of the screen that changes color. It then averages that region in each frame of the file and outputs the result as frame_brightness. processVideoFlashes.m requires the MATLAB function mmread, which you will need to download, unzip, and add to MATLAB's search path. This is a very useful utility, so it's worth having anyway.
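
The region-averaging step can be sketched in a few lines. This is a Python illustration of the idea, not a port of processVideoFlashes.m. It assumes the movie has already been decoded into a numpy array of frames (decoding the .asf file is not shown) and that the user's selection is given as a hypothetical rectangle `roi = (row_start, row_stop, col_start, col_stop)`.

```python
import numpy as np

def frame_brightness(frames, roi):
    """Mean brightness of a rectangular region in every frame.

    frames: array of shape (n_frames, height, width) or
            (n_frames, height, width, channels), already decoded.
    roi:    (row_start, row_stop, col_start, col_stop), half-open,
            chosen to cover the part of the screen that flashes.
    """
    frames = np.asarray(frames, dtype=float)
    r0, r1, c0, c1 = roi
    region = frames[:, r0:r1, c0:c1, ...]
    # Average over every axis except the frame axis (rows, columns, channels).
    return region.reshape(region.shape[0], -1).mean(axis=1)

# Example: four 10x10 RGB frames; odd frames have a white flash region.
frames = np.zeros((4, 10, 10, 3))
frames[1::2, 2:5, 2:5, :] = 255
brightness = frame_brightness(frames, (2, 5, 2, 5))
```

Averaging over a region rather than reading a single pixel reduces the effect of compression artifacts and sensor noise on the per-frame brightness trace.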

The test was analyzed by loading the data into MoBILAB and running this code, which takes frame_brightness as an input and depends on this function.

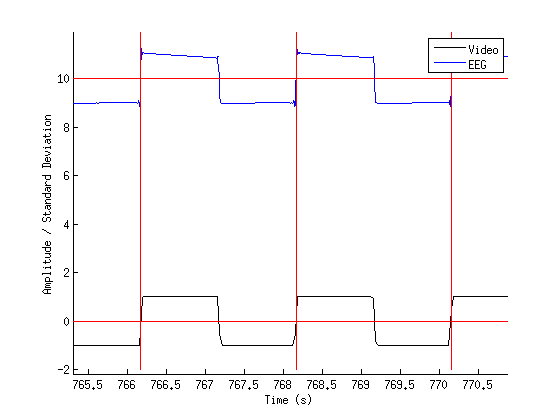

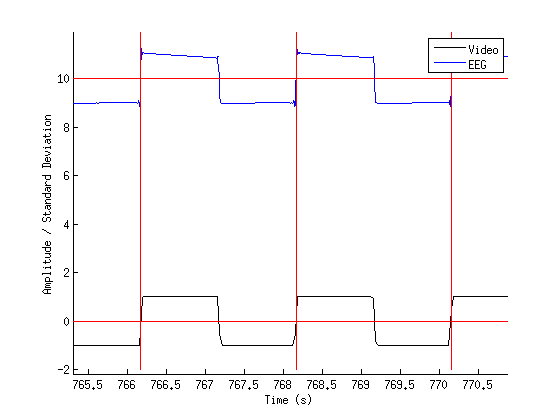

A detailed view of the data is shown below, with the phototransistor signal from the EEG analog input on top and the video stream on the bottom.

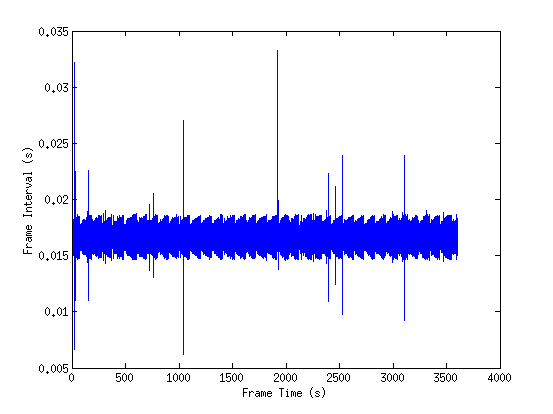

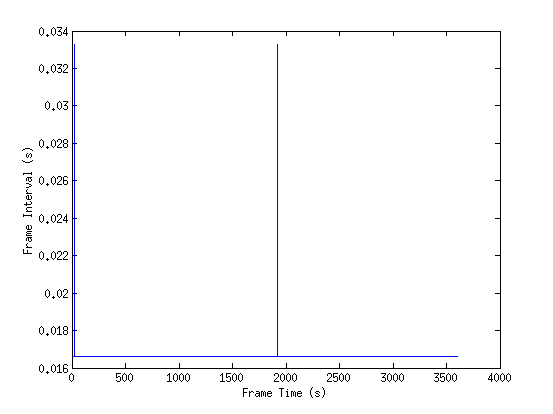

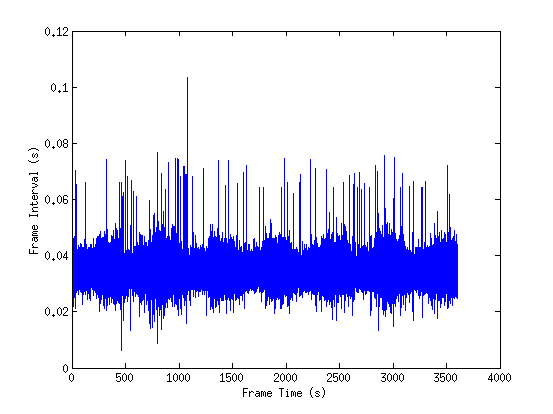

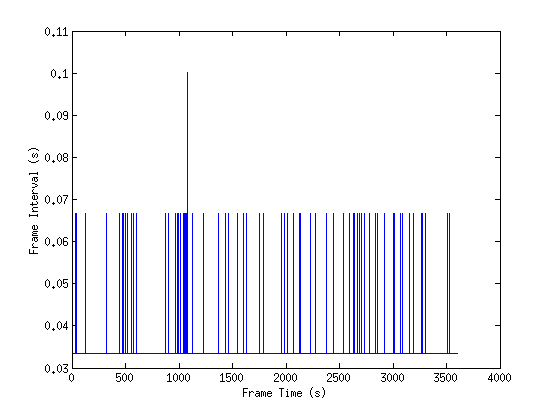

As before, we can look at the raw and corrected frame intervals for the psychophysics LSL stream.

However, this time we also want to look at the video stream. Video capture devices drop frames in much the same way, so we apply a similar analysis to that stream.

In this case, there are more dropped frames than usual. This was done intentionally (by running an extra video stream whose output was discarded) to make sure that the analysis is robust to this kind of error.
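
One common way to detect dropped frames from per-frame LSL markers is to compare each inter-marker interval with the nominal frame period. The sketch below assumes that most intervals are clean, so their median approximates one frame period, and that an interval of roughly m periods means m - 1 frames were skipped. It is not necessarily the correction used by the analysis code above, and the function name `dropped_frames` is hypothetical.

```python
import numpy as np

def dropped_frames(timestamps, nominal_period=None):
    """Estimate dropped frames from per-frame LSL marker timestamps (s).

    Returns (intervals, n_dropped, frame_numbers):
      intervals     - raw inter-marker intervals
      n_dropped[k]  - estimated frames missing between markers k and k + 1
      frame_numbers - corrected frame index for each received marker
    """
    ts = np.asarray(timestamps, dtype=float)
    intervals = np.diff(ts)
    if nominal_period is None:
        # Assumption: most intervals are clean, so the median is one frame period.
        nominal_period = np.median(intervals)
    # An interval of about m periods means m - 1 frames were skipped.
    n_dropped = np.rint(intervals / nominal_period).astype(int) - 1
    n_dropped = np.clip(n_dropped, 0, None)
    # Each received marker advances the frame count by 1 + the frames dropped before it.
    frame_numbers = np.concatenate(([0], np.cumsum(n_dropped + 1)))
    return intervals, n_dropped, frame_numbers

# Simulated 30 Hz camera with three dropped frames (indices 4, 7 and 8).
ts = np.delete(np.arange(10) / 30.0, [4, 7, 8])
intervals, n_dropped, frame_numbers = dropped_frames(ts)
```

Using the median rather than the mean keeps the period estimate from being inflated by the long intervals that dropped frames produce, which matters when, as here, frames were dropped deliberately.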

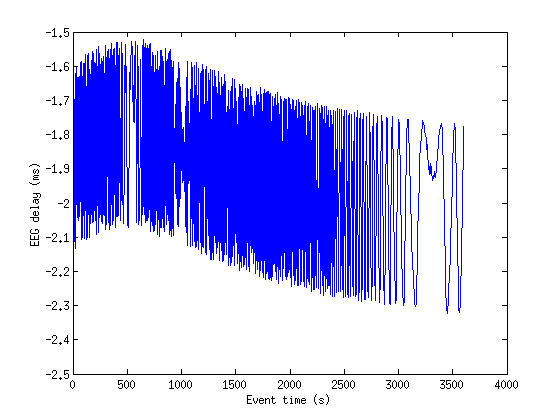

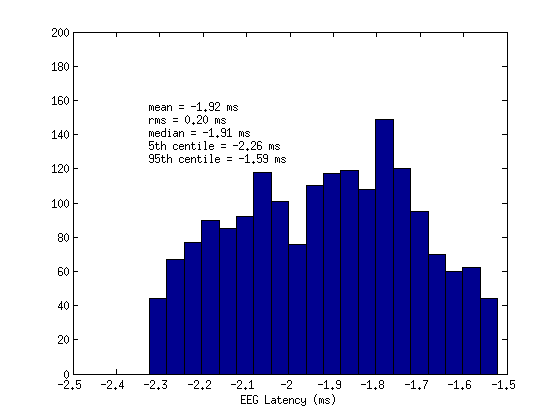

After the frame intervals are corrected, we can check the synchrony for EEG. The latency ranges from -2.26 to -1.92 ms, which is good. A typical constant offset of 7.72 ms was subtracted from the EEG times, and 16.13 ms was added to the marker times. These offsets do not zero the latency exactly, because the measurement conditions differ slightly from those of the previous test (for example, the overall light level and monitor variation over time), which shifts the threshold crossing slightly.
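
The offset arithmetic can be sketched as follows. The two constants come from the text. The pairing rule (each marker matched to the nearest EEG transition) and the sign convention (latency = corrected EEG time minus corrected marker time) are assumptions for illustration, and `latencies_ms` is a hypothetical name, not part of MoBILAB.

```python
import numpy as np

EEG_OFFSET_MS = 7.72      # subtracted from EEG threshold-crossing times (from the text)
MARKER_OFFSET_MS = 16.13  # added to 'black'/'white' marker times (from the text)

def latencies_ms(eeg_times_ms, marker_times_ms,
                 eeg_offset=EEG_OFFSET_MS, marker_offset=MARKER_OFFSET_MS):
    """Latency of each corrected marker to the nearest corrected EEG transition.

    Sign convention (an assumption): negative means the corrected EEG
    transition precedes the corrected marker time.
    Requires at least two EEG transitions.
    """
    eeg = np.sort(np.asarray(eeg_times_ms, dtype=float) - eeg_offset)
    markers = np.asarray(marker_times_ms, dtype=float) + marker_offset
    # Pair each marker with the closest EEG transition.
    idx = np.clip(np.searchsorted(eeg, markers), 1, len(eeg) - 1)
    left, right = eeg[idx - 1], eeg[idx]
    nearest = np.where(markers - left <= right - markers, left, right)
    return nearest - markers

# Hypothetical data: the light reaches the EEG 2 ms "early" after correction.
markers = np.array([0.0, 500.0, 1000.0])
eeg = markers + MARKER_OFFSET_MS + EEG_OFFSET_MS - 2.0
lat = latencies_ms(eeg, markers)
lat_range = (lat.min(), lat.max())  # reported as a min-to-max range, as in the text
```

Because both offsets are constants, they shift every latency equally; only the spread of the latencies reflects timing jitter.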

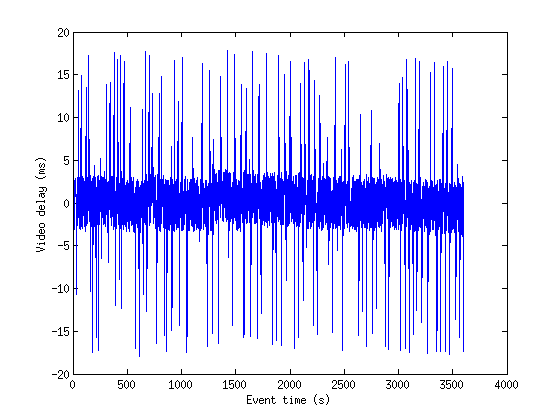

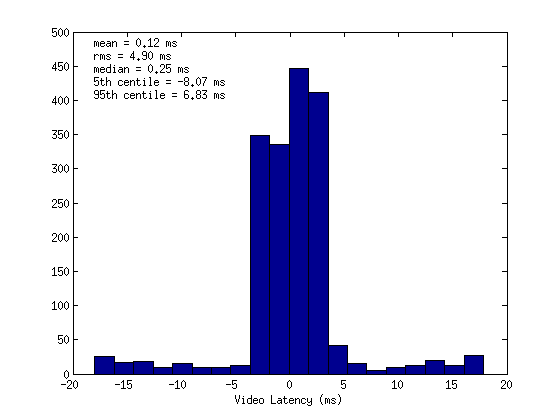

We can also check the synchrony for video. The latency ranges from -8.07 to 6.83 ms. This is less precise, but it is the best we can reasonably expect, because the camera acquires frames at 30 Hz (compared with the 2048 Hz sampling rate of the EEG). Greater precision would require a camera with a higher frame rate. A standard offset of 28.9 ms was subtracted from the video frame times, and a standard offset of 16.13 ms was added to the event marker times.
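
The frame-rate limit can be made concrete with an idealized model: if a transition is only seen at the next frame (or sample) boundary, the timing error is spread over one sample period, 1000/30 ≈ 33.3 ms for the camera versus 1000/2048 ≈ 0.49 ms for the EEG. The measured video range of -8.07 to 6.83 ms spans about 14.9 ms, which is within one frame period. The simulation below ignores exposure time, rolling shutter, and compression; these are simplifying assumptions, and the function names are hypothetical.

```python
import numpy as np

def sample_period_ms(rate_hz):
    """Spacing between samples (ms): the window in which a transition can hide."""
    return 1000.0 / rate_hz

def simulate_quantization_error(rate_hz, n=10_000, seed=0):
    """Idealized timing error when a transition is only seen at the next sample.

    Ignores exposure time, rolling shutter and compression (assumptions).
    """
    rng = np.random.default_rng(seed)
    period = sample_period_ms(rate_hz)
    true_ms = rng.uniform(0.0, 1000.0, n)
    # The transition first shows up at the next sample/frame boundary.
    seen_ms = np.ceil(true_ms / period) * period
    err = seen_ms - true_ms
    # Removing the mean mimics subtracting a constant offset; the spread remains.
    return err - err.mean()

video_err = simulate_quantization_error(30)    # camera frame rate from the text
eeg_err = simulate_quantization_error(2048)    # EEG sampling rate from the text
video_spread = video_err.max() - video_err.min()  # close to 33.3 ms
eeg_spread = eeg_err.max() - eeg_err.min()        # close to 0.49 ms
```

Subtracting a constant offset, as done with the 28.9 ms and 16.13 ms values, only recenters this error; it cannot shrink the spread, which is why a faster camera is the real fix.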

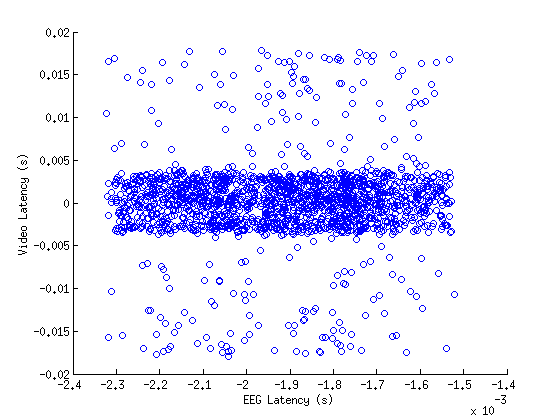

We can also look at the correlation between the video and EEG latencies. There is no correlation, which indicates that the variability is introduced by the recording rather than by the generation of the stimuli: jitter in stimulus presentation would shift both latencies together.
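
The correlation check can be sketched with a Pearson coefficient over per-event latencies. This assumes the EEG and video latency arrays are paired event by event in the same order. The synthetic data below are hypothetical and only illustrate the reasoning: shared stimulus jitter produces a clear correlation, while independent recording noise does not.

```python
import numpy as np

def latency_correlation(eeg_latency_ms, video_latency_ms):
    """Pearson correlation between per-event EEG and video latencies.

    Both arrays must describe the same stimulus events, in the same order.
    """
    eeg = np.asarray(eeg_latency_ms, dtype=float)
    video = np.asarray(video_latency_ms, dtype=float)
    if eeg.shape != video.shape:
        raise ValueError("latency arrays must pair up event by event")
    return np.corrcoef(eeg, video)[0, 1]

# Hypothetical data for 200 events.
rng = np.random.default_rng(1)
n = 200
stim_jitter = rng.normal(0.0, 10.0, n)        # jitter in stimulus presentation
eeg_noise = rng.normal(0.0, 0.2, n)           # small EEG recording noise
video_noise = rng.uniform(-16.7, 16.7, n)     # frame-quantization-like video noise

r_shared = latency_correlation(stim_jitter + eeg_noise, stim_jitter + video_noise)
r_independent = latency_correlation(eeg_noise, video_noise)
```

With stimulus jitter present, both latencies move together and the coefficient is clearly positive. With only independent recording noise, it stays near zero, which matches the pattern described above.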

The data for this test was generated on 2014/03/31.