The EEGLAB News #18

Ying Choon Wu, Ph.D.

Associate Project Scientist, Institute for Neural Computation, UC San Diego

Email: ycwu@ucsd.edu

Lab website: https://insight.ucsd.edu/

Dr. Ying Choon Wu and her team at the Institute for Neural Computation explore higher-order cognition at the intersection of mind, brain, and body. She has a passion for collaboration and for recording data in the real world. When she describes the projects she is spearheading, her enthusiasm is contagious. "I love learning new things and crossing boundaries," she explains, "... designing science experiments that can double as art experiments and vice versa, exploring new domains, such as opera or laparoscopy, and learning new techniques." Her work is diverse. She explores how artificial intelligence (AI), augmented and virtual reality, and wearable biosensing can promote ecological literacy and art, and how these technologies can encourage and support creativity, learning, teamwork, community, and connection. Here, she shares details about her research:

Can you explain your research and what inspired you to study this topic?

I first attended Reed College and majored in English Literature, but went on to study Applied Linguistics at UCLA and Cognitive Science at UC San Diego. As a graduate student, my dissertation examined the impact of co-speech gestures on spoken discourse comprehension. One aspect of this work that really interested me was the possibility that information encoded in gesture-based somatomotor representations and language-based symbolic representations could jointly shape people’s understanding of one another. Since then, my research has expanded from language to explore learning, problem solving, teamwork, aesthetic experience, and more. However, the relationship between embodied experience and higher-order cognition is still a fundamental question underlying all of these research trajectories.

What are your current projects?

One project, Art + Empathy Lab, is a collaboration between the Swartz Center for Computational Neuroscience (SCCN) and the Arthur C. Clarke Center for Human Imagination, with support from the California Arts Council. This work examines neurocognitive and physiological correlates of empathy, attention, and emotion in order to explore how art museums can cultivate empathic understanding. We combine state-of-the-art wearable biosensors and machine perception to study individual and culturally mediated variation in aesthetic engagement during real-world exploration of visual art with our partners at the San Diego Museum of Art. Our study was highlighted in the San Diego Museum of Art Member Magazine, February-May 2020 (pp. 26-27)!

Another study, Embodied Coding (supported by the National Science Foundation), explores how augmented reality (AR) and virtual reality (VR) can support human-centered, collaborative computing. (Photo: A child using an AR headset during the Embodied Coding project; Image credit: Dr. Wu’s Insight website)

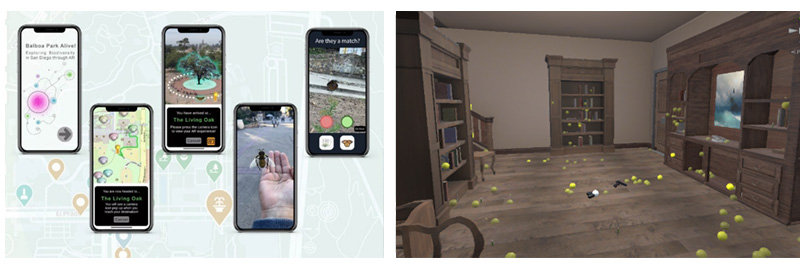

A few other projects include Balboa Park Alive, where we aim to foster care for our region’s biodiversity by promoting ecological literacy in youth and families through augmented reality (AR) installations at Balboa Park; Visual Search in VR (supported by the National Science Foundation and the Army Research Laboratory), where we use VR, electroencephalography (EEG), and electrocardiography (ECG) to model ecologically valid visual search processes in 3D space; and Escape Room, a series of puzzles set in virtual reality that can be used to test problem solving and insight.

(Screenshots of Balboa Park Alive project (left) and Visual Search in VR project (right); Credit: Dr. Wu’s Insight website)

How did you first become interested in your field, and how did you find out about the Swartz Center for Computational Neuroscience (SCCN)?

As a Master's student in Applied Linguistics at UCLA, I was inspired by my mentor, John Schumann. Language, I realized, is more than a formal system. It represents the intersection of culture, cognition, and biology. Then, when I was a graduate student in Cognitive Science at UC San Diego, the Swartz Center was founded. I became familiar with EEGLAB during my post-doc appointment at SCCN.

How does EEGLAB help you in your research?

Most of my projects demand the analysis of multi-modal data (EEG, ECG, eye tracking). We use EEGLAB and its plug-ins to accomplish most EEG analysis tasks. Some of the plug-ins that we use frequently include ICLabel, clean_rawdata, DIPFIT, and MoBILAB. We also perform multi-modal analyses, such as fixation- or gaze-locked event-related spectral perturbations (ERSPs).

I use MoBILAB extensively for work involving VR. While I have done a lot of work that combines EEG and eye tracking in VR, we are still just beginning to integrate motion capture into the mix. Finding a reliable, affordable, wearable ECG monitoring system has proved challenging, but we are collaborating with my colleague Tzyy-Ping Jung to explore systems such as Vivalink. (Photo: Embodied Coding session, Raikes Summer Camp, University of Nebraska, Lincoln. Credit: Robert Twomey)

I also enjoy stepping out of the lab altogether and recording data in the real world. I have done studies at the San Diego Museum of Art (SDMA) and the Lincoln Center in NYC. We are still analyzing a lot of the multi-modal data collected in those locations. These projects have seeded new collaborations. We are currently exploring the possibility of incorporating ECG measurement and analysis in a summer wellness program led by SDMA. We are also launching a new collaboration on a concert performance that incorporates EEG-guided music and visualizations.

What do you hope to accomplish in the next seven years?

Actually, my answer depends on the scale of inquiry. At the level of society and culture, I hope to continue exploring how AI, augmented and virtual reality, and wearable biosensing can support art, creativity, learning, teamwork, community, and connection. At the level of cognition, I am still very interested in understanding how our capacity for perception and action informs higher-order abilities such as solving problems, reasoning about and understanding complex concepts, appreciating art, and more. (Photo: Demo of the VR Escape Room in the MoBI lab at UC San Diego. Institute for Neural Computation Open House, 4/2/19)

How did some of your collaborations come about?

I have collaborated with the Clarke Center for a number of years, as there is considerable overlap in our interests in extended reality, cognitive neuroscience, art, and more. Patrick Coleman, who has since moved on to Development at UC San Diego, was instrumental in facilitating our projects with the SDMA, as well as a current project on virtual psychedelics with members of Psychiatry. Our collaboration with CREATE emerged from the Embodied Coding project, which explores the impact of creative coding in VR on computational concept learning and computational thinking.

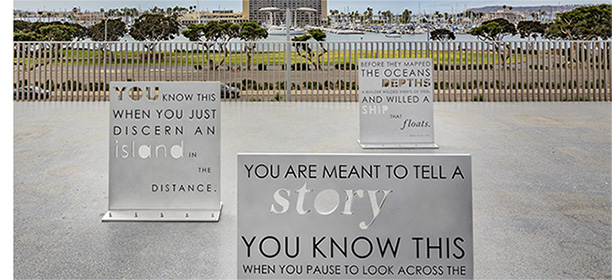

Would you like to share any other thoughts? Any fun facts about yourself that most people don’t know?

I live on a sailing catamaran with my husband and daughter in a marina off Shelter Island. I also love poetry (early in my education, I attended Reed College and majored in English Literature), and I am still involved with the poetry community. I used to be a poetry editor for Writers Resist. I also assist with the Kids! San Diego Poetry Annual, though unfortunately I don’t have a lot of time for that these days. I have a poem, "You Are Meant to Tell A Story," installed outdoors at the San Diego Airport.

July 2024