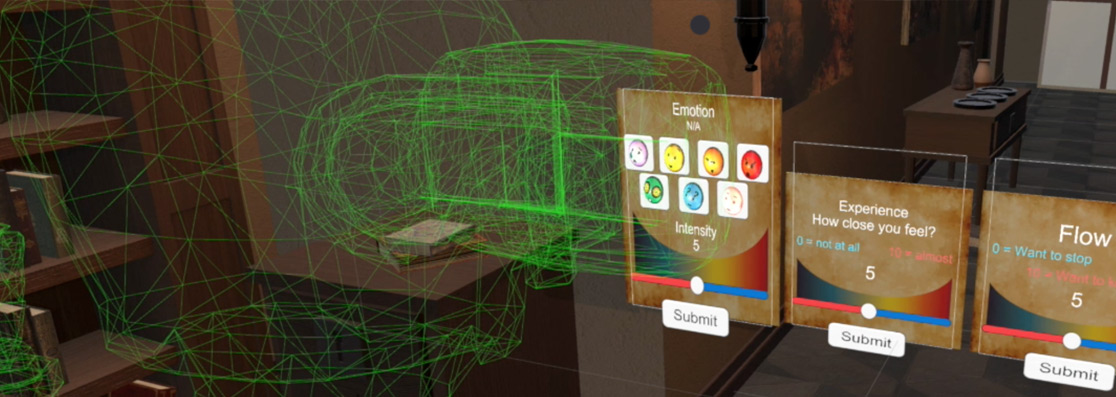

Real-World Neuroimaging: Demo of the Escape Room (In-game assessment screen)

Projects

Real-world neuroimaging

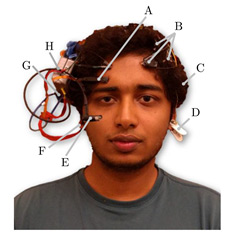

Fig. 1 A wearable multi-modal bio-sensing headset features (A) World camera, (B) EEG Sensors, (C) Battery, (D) EEG Reference Electrode, (E) Eye Camera, (F) Earlobe PPG Sensor, (G) Headphone/speaker connector, and (H) Embedded System. [Figure is copied from Siddharth, et al., 2018]


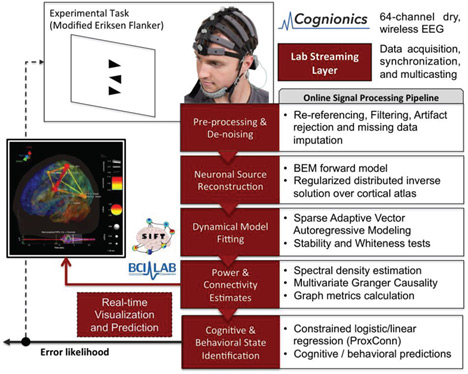

Real-time EEG analysis and modeling

Recent advances in dry-electrode electroencephalography (EEG) and wearable/wireless data-acquisition systems have spurred new research into, and development of, EEG applications for real-world cognitive-state monitoring, affective computing, clinical diagnostics and therapeutics, and brain–computer interfaces (BCI), among others. These practical applications call for further developments in signal processing and machine learning to improve real-time (online) measurement and classification of brain and behavioral states from small samples of noisy EEG data (Mullen et al., 2015). Our Center has recently developed a real-time software framework that includes adaptive artifact rejection using Artifact Subspace Reconstruction (ASR), cortical source localization, multivariate effective connectivity inference, data visualization, and cognitive-state classification from connectivity features using a constrained logistic regression approach (ProxConn) (Mullen et al., 2015). The figure below shows a schematic of the real-time data processing pipeline. A video demo can be found at https://www.dropbox.com/s/tfp49lbw1cpo9cf/ASR%20SIFT.mp4?dl=0


Real-time EEG Source-mapping Toolbox and its application to automatic artifact removal

SCCN graduate students Shawn Hsu and Luca Pion-Tonachini have recently developed a new Matlab-based toolbox, the Real-time EEG Source-mapping Toolbox (REST), for real-time multi-channel EEG data analysis and visualization (Pion-Tonachini, Hsu et al., EMBC 2015; Pion-Tonachini, Hsu et al., 2018). REST combines adaptive artifact rejection (ASR), online recursive source separation algorithms (e.g., ORICA; Hsu et al., 2015), and machine learning approaches to automatically reject muscle, movement, and eye artifacts in EEG data in near real-time (Pion-Tonachini, Hsu et al., EMBC 2015; Chang, Hsu et al., 2018; Pion-Tonachini, Hsu et al., 2018). Video demos are available at https://sccn.ucsd.edu/TP/demos.php. The Toolbox's open-source code is available on GitHub.

Brain-computer interfaces

Brain-computer interfaces (BCIs) allow users to translate their intention into commands to control external devices, enabling an intuitive interface for disabled and/or nondisabled users (Wolpaw et al., 2002). Among various neuroimaging modalities, the electroencephalogram (EEG) is one of the most popular for real-world BCI applications due to its non-invasiveness, low cost, and high temporal resolution (Wolpaw et al., 2002). Recently, our group at the Swartz Center for Computational Neuroscience has developed state-of-the-art BCIs based on steady-state visual evoked potentials (SSVEP), an intrinsic neural electrophysiological response to repetitive visual stimulation, and achieved a new record of BCI communication speed (an information transfer rate of 325 bits/min). The figure below shows a high-speed BCI speller adapted from our publication in PNAS (Chen et al., 2015). More recently, our BCI speller achieved a spelling rate of up to 75 characters (∼15 words) per minute (Nakanishi et al., 2018). Video demos can be found at https://sccn.ucsd.edu/TP/demos.php.
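To make the bits-per-minute figure concrete, the sketch below computes an information transfer rate using the definition most commonly seen in the BCI literature (often attributed to Wolpaw and colleagues). The text above does not state which definition was used for the reported rate, so treat the formula choice as an assumption. The function name and the example values (40 targets, 90% accuracy, 1 s per selection) are hypothetical and are not taken from the cited studies.

```python
import math


def itr_bits_per_min(n_targets, accuracy, seconds_per_selection):
    """Information transfer rate (bits/min) under the common Wolpaw-style definition.

    bits per selection = log2(N) + P*log2(P) + (1 - P)*log2((1 - P) / (N - 1))
    """
    n, p = n_targets, accuracy
    if n < 2 or not 0.0 <= p <= 1.0 or seconds_per_selection <= 0:
        raise ValueError("need n_targets >= 2, 0 <= accuracy <= 1, positive selection time")
    bits = math.log2(n)
    if 0.0 < p < 1.0:
        bits += p * math.log2(p) + (1 - p) * math.log2((1 - p) / (n - 1))
    elif p == 0.0:
        # P*log2(P) tends to 0 as P -> 0, so only the error term remains.
        bits += math.log2(1.0 / (n - 1))
    return bits * 60.0 / seconds_per_selection


# Hypothetical speller settings, for illustration only.
example_itr = itr_bits_per_min(n_targets=40, accuracy=0.9, seconds_per_selection=1.0)
print(f"ITR: {example_itr:.1f} bits/min")
```

Under this definition the rate rises with more targets, higher accuracy, and shorter selection times, which is why high-speed spellers push on all three.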


Affective computing

Affective computing (sometimes called artificial emotional intelligence, or emotion AI) aims to recognize, interpret, process, and simulate human affects. Throughout the past decade, most studies in affective computing have classified human emotions using face images or videos. Recent developments in bio-sensing hardware have spurred growing use of physiological measurements such as the electroencephalogram (EEG), electrocardiogram (ECG), galvanic skin response (GSR), and heart-rate variability (HRV) to detect human valence and arousal. However, the results of these studies are constrained by the limitations of each modality, such as the absence of physiological biomarkers in face-video analysis, the poor spatial resolution of EEG, and the poor temporal resolution of GSR. Scant research has been conducted to compare the merits of these modalities and understand how best to use them individually and jointly (Siddharth et al., 2018). Our group has been developing novel signal-processing and deep-learning-based methods to detect human emotion from various bio-sensing and video-based modalities. We first evaluate the emotion-classification performance obtained by each modality individually. We then evaluate the performance obtained by fusing the features from these modalities and compare it with that obtained by single modalities.
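The per-modality versus fused comparison described above can be sketched as follows. This is a minimal illustration, not the group's pipeline: it assumes simple feature-level fusion (standardize each modality, then concatenate) and swaps in a nearest-centroid classifier with leave-one-out evaluation in place of the deep-learning methods mentioned in the text. All function names, feature values, and labels are hypothetical toy data.

```python
import math


def zscore_columns(rows):
    """Standardize each feature column to zero mean and unit variance."""
    scaled_cols = []
    for col in zip(*rows):
        mean = sum(col) / len(col)
        std = math.sqrt(sum((v - mean) ** 2 for v in col) / len(col)) or 1.0
        scaled_cols.append([(v - mean) / std for v in col])
    return [list(row) for row in zip(*scaled_cols)]


def fuse(*modalities):
    """Feature-level fusion: standardize each modality, then concatenate per trial."""
    scaled = [zscore_columns(m) for m in modalities]
    return [sum(parts, []) for parts in zip(*scaled)]


def loo_accuracy(features, labels):
    """Leave-one-out accuracy of a nearest-centroid classifier."""
    correct = 0
    for i, (x, y) in enumerate(zip(features, labels)):
        centroids = {}
        for label in sorted(set(labels)):
            rows = [f for j, (f, l) in enumerate(zip(features, labels))
                    if l == label and j != i]
            centroids[label] = [sum(c) / len(rows) for c in zip(*rows)]
        predicted = min(centroids, key=lambda l: math.dist(x, centroids[l]))
        correct += predicted == y
    return correct / len(labels)


# Hypothetical toy features: one EEG feature and two ECG features per trial.
eeg = [[0.1], [0.2], [0.3], [0.9], [1.0], [1.1]]
ecg = [[60, 0.5], [62, 0.4], [61, 0.6], [80, 0.1], [82, 0.2], [81, 0.15]]
labels = [0, 0, 0, 1, 1, 1]  # e.g. low vs. high arousal (made-up labels)

fused = fuse(eeg, ecg)
for name, feats in [("EEG", zscore_columns(eeg)), ("ECG", zscore_columns(ecg)), ("fused", fused)]:
    print(name, loo_accuracy(feats, labels))
```

Standardizing before concatenation keeps a modality with large raw units (here the heart-rate-like column) from dominating the distance computation.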



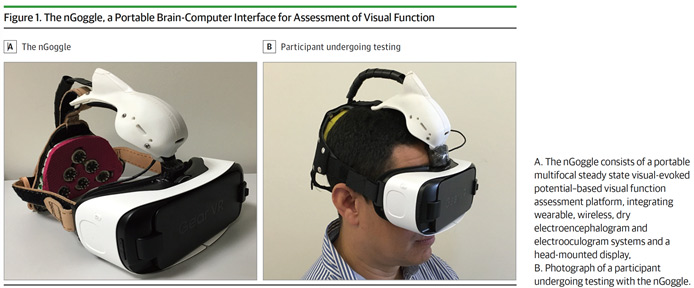

A VR-based BCI objectively assesses our vision

Assessment of loss of visual function is an essential component of the management of numerous conditions, including glaucoma and retinal and neurological disorders. The current assessment of visual-field loss with standard automated perimetry (SAP) is limited by the subjectivity of patient responses and high test-retest variability, and frequently requires many tests for effective detection of change over time. Our group has collaborated with Dr. Felipe Medeiros of Duke University to develop a wearable device that uses a head-mounted display (HMD) integrated with wireless EEG, capable of objectively assessing visual-field deficits using multifocal steady-state visual-evoked potentials (mfSSVEP) (Nakanishi et al., 2017). In contrast to the transient event-related potentials elicited during conventional visual-evoked potential (VEP) or multifocal VEP examination, rapid flickering visual stimulation produces a brain response known as the steady-state visual evoked potential (SSVEP), which is characterized by a quasi-sinusoidal waveform whose frequency components are constant in amplitude and phase. We have recently succeeded in employing the mfSSVEP technique to detect peripheral and localized visual-field losses (Nakanishi et al., 2017). The figure below shows our nGoggle prototype from our publication in JAMA Ophthalmology in 2017.
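Because an SSVEP is quasi-sinusoidal at the stimulation frequency, the basic idea behind frequency-tagged detection can be illustrated by comparing signal power at a few candidate flicker frequencies. The sketch below is a simplified stand-in, not the mfSSVEP method from the cited work: it projects a synthetic single-channel signal onto sine and cosine references and picks the strongest candidate. The sampling rate, candidate frequencies, noise level, and function names are all assumptions chosen for illustration.

```python
import math
import random


def power_at(signal, fs, freq):
    """Power at one frequency, from projections onto cosine and sine references."""
    n = len(signal)
    c = sum(x * math.cos(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    s = sum(x * math.sin(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    return (c * c + s * s) / (n * n)


def detect_ssvep(signal, fs, candidates):
    """Return the candidate stimulation frequency with the largest power."""
    return max(candidates, key=lambda f: power_at(signal, fs, f))


def synth_ssvep(freq, fs=250, seconds=2.0, noise_sd=1.0, seed=0):
    """Synthetic 'EEG': a unit-amplitude sinusoid at freq plus Gaussian noise."""
    rng = random.Random(seed)
    n = int(fs * seconds)
    return [math.sin(2 * math.pi * freq * i / fs) + rng.gauss(0.0, noise_sd)
            for i in range(n)]


FS = 250                                # assumed sampling rate in Hz
CANDIDATES = [8.0, 10.0, 12.0, 15.0]    # hypothetical flicker frequencies in Hz

detected = detect_ssvep(synth_ssvep(12.0, fs=FS), FS, CANDIDATES)
print("Detected stimulation frequency:", detected, "Hz")
```

In a multifocal setting, each visual-field sector would flicker at its own frequency, so a weak response at one frequency would point to reduced function in the corresponding sector; real systems use multi-channel methods that are considerably more robust than this single-channel projection.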

Real-world stress affects human performance and brain activities (funded by NSF; PI: Dr. Ying Wu, co-PI: T-P Jung)

Various real-life stressors, such as fatigue, anxiety, and stress, can induce homeostatic changes in human behavior, brain, and body. For instance, associations have been uncovered between achievement on tests of memory and attention and the experience of everyday stressors such as poor sleep (Gamaldo et al., 2010) or minor stressful events (Neupert et al., 2006; Sliwinski et al., 2006). Most research paradigms in cognitive neuroscience have been compelled to dismiss the influence of such stressors as uncontrollable noise. However, with the advent of inexpensive, wearable biomonitors and advances in wireless and cloud-based computing, it is becoming increasingly feasible to detect and record fluctuations in daily experience on a 24/7 basis and relate them to human performance across a broad range of perceptual, cognitive, and motor functions. Our study aims to examine how everyday experience relates to intra-individual variability in performance on cognitively demanding tasks. Using low-cost, unobtrusive, wearable biosensors and mobile, cloud-based computing systems, a Daily Sampling System (DSS) is implemented to measure day-to-day fluctuations along a number of parameters related to cognitive performance, including sleep quality, stress, and anxiety. Participants are scheduled for same-day experimental problem-solving sessions when their daily profile suggests optimal, fair, or unfavorable conditions for achievement. This approach affords a comparison within the same individuals of variability in performance measures as a function of their daily state. During scheduled sessions, classic stress-induction techniques are integrated into an engaging, realistic Escape Room challenge implemented in virtual reality (VR) while continuous EEG, ECG, eye gaze, pupillometry, and HR/HRV are recorded and synchronized.
We hypothesize that problem-solving strategies and outcomes will be modulated to different degrees across individuals by recent experiences of stress or fatigue. Further, on the basis of the project leaders' recent work, it is anticipated that vulnerable versus resilient individuals may exhibit different patterns of both resting-state and task-related EEG dynamics. From this line of findings, it is possible to begin modeling the impact of variations in daily experience on neurocognitive processes. The figure below shows the experimenter’s view of the VR Escape Room and the subject’s visual field through the HTC Vive HMD. A video demo can be found at https://sccn.ucsd.edu/TP/demos.php.

A Brain-Computer Interface for Cognitive-state monitoring

Most research in the field of brain-computer interfaces aims to restore or replace functions lost to a patient’s failed nervous system, for example in patients who are paralyzed and have no control over their muscles. However, a much larger population of ‘healthy’ people who suffer momentary, episodic, or progressive cognitive impairments in daily life has, ironically, been overlooked. For example, as the last seventy years of research in human vigilance and attention has shown, humans are not well suited to maintaining alertness and attention under monotonous conditions, particularly during the normal sleep phase of their circadian cycle. Catastrophic errors can result from momentary lapses in alertness and attention during periods of relative inactivity. Many people, not just patients, can benefit from a prosthetic system that continuously monitors fluctuations in cognitive states in the workplace and/or the home (Lin et al., 2008). Our research team has published several fundamental studies leading toward a practical BCI and has demonstrated the feasibility of accurately estimating an individual’s cognitive state, as indexed by changes in their level of task performance, by monitoring changes in EEG power spectra or other measures. We have developed apparatus, real-time signal processing, and machine-learning algorithms to mitigate cognitive fatigue and attentional lapses.
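As a rough illustration of what monitoring changes in EEG power spectra can look like, the sketch below computes mean periodogram power in two frequency bands for a short window and forms their ratio. It is not the team's published estimator: the band edges (theta 4 to 7 Hz, alpha 8 to 12 Hz), the choice of a ratio as the index, the sampling rate, and the synthetic windows are all assumptions, and no claim is made here about how such an index maps to task performance.

```python
import cmath
import math


def band_power(signal, fs, low_hz, high_hz):
    """Mean periodogram power over DFT bins with low_hz <= f <= high_hz."""
    n = len(signal)
    powers = []
    for k in range(n // 2 + 1):
        if low_hz <= k * fs / n <= high_hz:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(signal))
            powers.append(abs(coeff) ** 2 / n)
    if not powers:
        raise ValueError("no DFT bins fall inside the requested band")
    return sum(powers) / len(powers)


def theta_alpha_ratio(signal, fs):
    """Ratio of theta-band (4-7 Hz) to alpha-band (8-12 Hz) power; bands are assumed."""
    return band_power(signal, fs, 4.0, 7.0) / band_power(signal, fs, 8.0, 12.0)


def toy_window(theta_amp, alpha_amp, fs=128, seconds=2.0):
    """Synthetic window: a 6 Hz and a 10 Hz sinusoid with the given amplitudes."""
    n = int(fs * seconds)
    return [theta_amp * math.sin(2 * math.pi * 6.0 * i / fs)
            + alpha_amp * math.sin(2 * math.pi * 10.0 * i / fs)
            for i in range(n)]


FS = 128  # assumed sampling rate in Hz
window_a = toy_window(theta_amp=0.2, alpha_amp=1.0)  # hypothetical window, weak theta
window_b = toy_window(theta_amp=1.0, alpha_amp=1.0)  # hypothetical window, strong theta

ratio_a = theta_alpha_ratio(window_a, FS)
ratio_b = theta_alpha_ratio(window_b, FS)
print(f"theta/alpha ratio: window A = {ratio_a:.3f}, window B = {ratio_b:.3f}")
```

A real-time system would compute such features over sliding windows of artifact-cleaned data and feed them to a model calibrated against each individual's task performance.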


human behavior may exist. It remains unclear how well the current knowledge of human brain function translates into the highly dynamic real world (
